Lehigh Underwater Robotics

Software Team Lead

I worked on a research project to design and build an autonomous underwater drone. The team was divided into four sub-teams: business, mechanical, electrical, and software. As the software team lead, I was integral to the project's success, since the systems I designed and built formed the basis of the drone's operation.

We participated in RoboNation's AUV competition, RoboSub. The competition consists of a series of obstacle-course-style tasks that competitors must complete. The drone had to stay fully submerged in the pool for the entire run and could have no outside communication.

My repository, containing the latest code and ideas, lives here

The public repositories are in a GitHub organization here

Our Read the Docs-powered documentation is here

Part 1: Before and during competition

Onboard electronics

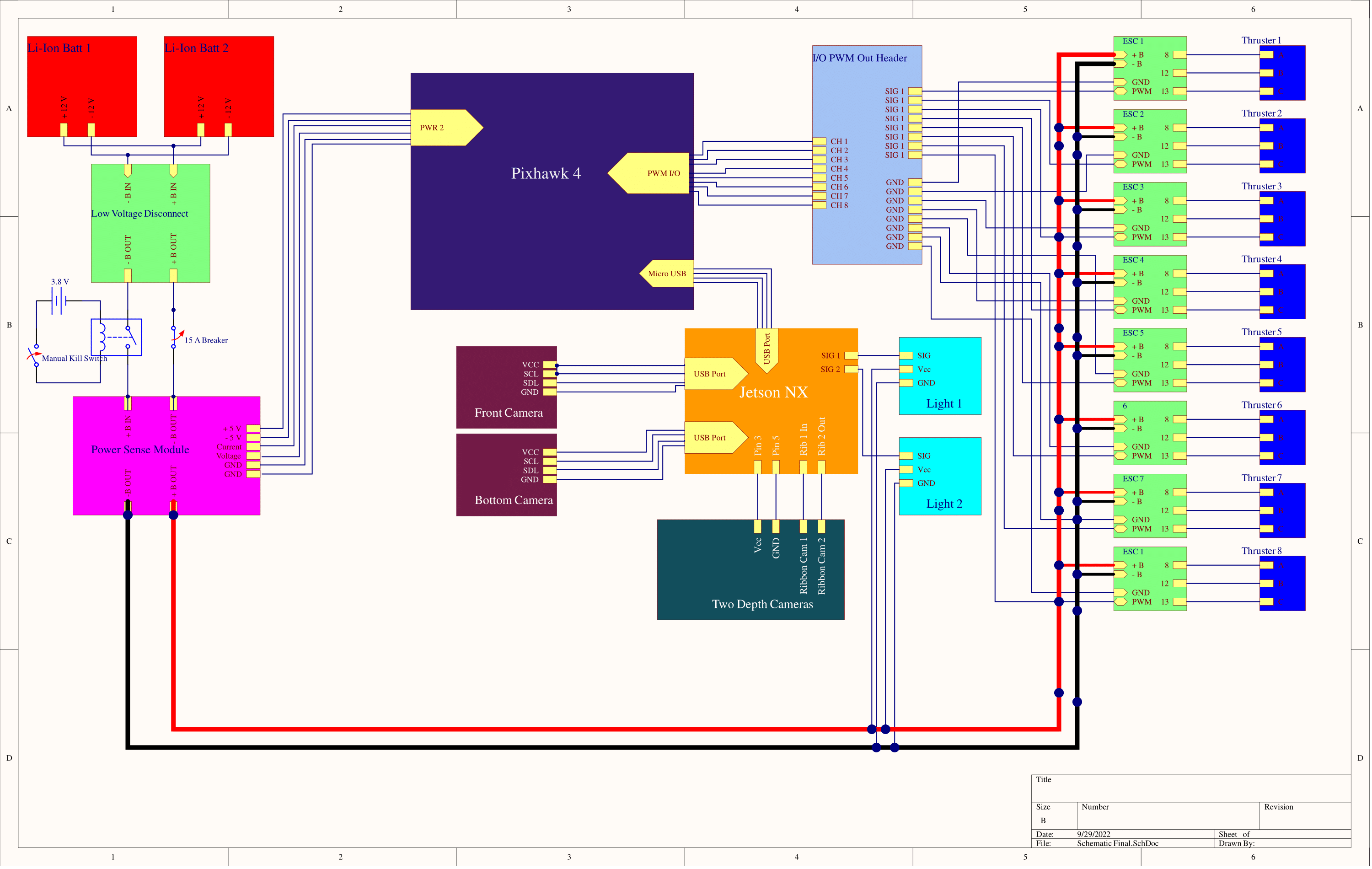

We use an Nvidia Jetson Xavier NX as our main onboard computer. It connects to a Pixhawk 4, which provides IMU data and talks to the ESCs (electronic speed controllers) that drive the thrusters. Two USB cameras are connected to the Jetson, one facing forward and the other facing downward. A forward-facing stereo camera is also connected to the Jetson through ribbon cables.

Electrical schematic, created by the electrical team

Image recognition

The stereo camera captures two frames, one left and one right. We compute a depth map from the pair using OpenCV, which gives us a realistic approximation of the distance to objects in view.
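For a rectified stereo pair, depth follows from disparity as Z = f * B / d. Here f is the focal length in pixels, B is the baseline between the two lenses, and d is the horizontal disparity in pixels. The sketch below shows only that conversion step. It assumes a disparity map has already been computed, for example with OpenCV's StereoBM, whose output is 16-bit fixed point scaled by 16. The function names and the focal length and baseline used with them are hypothetical, not our camera's calibration.

```cpp
#include <cstdint>
#include <limits>

// Convert a raw StereoBM disparity value (16-bit fixed point with
// 4 fractional bits) into a disparity in pixels.
double raw_to_disparity(std::int16_t raw) {
    return raw / 16.0;
}

// Depth from disparity for a rectified stereo pair: Z = f * B / d.
// focal_px: focal length in pixels; baseline_m: distance between lenses in meters.
// Non-positive disparity has no finite depth (StereoBM marks unmatched pixels
// with a negative value), so return infinity for those.
double depth_from_disparity(double disparity_px, double focal_px, double baseline_m) {
    if (disparity_px <= 0.0) {
        return std::numeric_limits<double>::infinity();
    }
    return focal_px * baseline_m / disparity_px;
}
```

With a hypothetical 700 px focal length and 6 cm baseline, a raw value of 560 (35 px of disparity) corresponds to an object about 1.2 m away. Small disparities give large, noisy depths, so far-away readings are the least reliable.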

We also need to detect specific classes of objects. The other software team member trained a YOLO model. I wrote a ROS node that runs this model on frames captured with OpenCV and publishes a message whenever an object is detected. Inference runs on the Jetson's GPU to keep image processing fast.

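```cpp
// assumes: using namespace std; using namespace cv; using namespace cv::dnn;
// using namespace std::chrono_literals; (for 500ms)

// load the class list of our model
ifstream ifs("/home/lur/model/obj.names");
string line;
while (getline(ifs, line)) {
    class_list.push_back(line);
}

// load the Darknet model (weights file, then config file)
net = readNet("/home/lur/model/yolo-obj_final.weights",
              "/home/lur/model/yolo-obj.cfg");

// run inference on the GPU through OpenCV's CUDA backend
net.setPreferableTarget(DNN_TARGET_CUDA);
net.setPreferableBackend(DNN_BACKEND_CUDA);

// create publisher and subscriber for communication
// (CameraMsg and BrainMsg are placeholder names for our message types;
// the queue depth of 10 is also illustrative)
camera_pub = this->create_publisher<CameraMsg>("/lur/camera", 10);
brain_sub = this->create_subscription<BrainMsg>(
    "/brain", 10, std::bind(&Camera::brain_sub_callback, this, std::placeholders::_1));

// open our video captures; fc and bc are class members,
// so the captures stay open after the constructor returns
if (!fc.open(front_camera_id)) {
    printf("ERROR: Unable to open front camera with id: %d\n", front_camera_id);
}
if (!bc.open(bottom_camera_id)) {
    printf("ERROR: Unable to open bottom camera with id: %d\n", bottom_camera_id);
}

// create a timer that fires at 2 Hz
timer = this->create_wall_timer(500ms, std::bind(&Camera::timer_callback, this));
```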

The timer callback looks like this:

```cpp
void timer_callback() {
    // failsafe: the timer fires twice per second, so this kills
    // execution after roughly `timeout` seconds
    if (++this->count > this->timeout * 2) exit(1);

    // in practice, we only captured when instructed;
    // this code is for testing and data collection
    if (!this->front_capturing) {
        if (!this->front_detected) detect_front();
        this->front_detected = false;
    }

    if (!this->bottom_capturing) {
        if (!this->bottom_detected) detect_bottom();
        this->bottom_detected = false;
    }
}
```

To pass image detections between nodes, we created a custom ROS message type that holds the information for each recognized object:

```
std_msgs/Header header
uint32 class_id
float64 confidence
uint32 x
uint32 y
uint32 width
uint32 height
```
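One way to fill these fields from the network's output is sketched below. The sketch makes several assumptions:

- It uses the usual layout of a Darknet YOLO output row as returned by OpenCV's DNN module: normalized center x, center y, width, and height, then an objectness score, then one score per class.
- Following OpenCV's object detection sample, confidence is taken as the highest class score.
- The Detection struct and decode_row function are hypothetical. The struct mirrors the message fields and treats x and y as the top-left corner of the box.

That corner convention and the threshold are illustrative, not necessarily what our node used.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>
#include <optional>
#include <vector>

// Plain struct mirroring the detection message fields (illustrative only).
struct Detection {
    std::uint32_t class_id;
    double confidence;
    std::uint32_t x;       // top-left corner in pixels (assumed convention)
    std::uint32_t y;
    std::uint32_t width;
    std::uint32_t height;
};

// Decode one YOLO output row: [cx, cy, w, h, objectness, score_0, score_1, ...],
// with box values normalized to [0, 1]. Returns nothing if the best class
// score is below `threshold`.
std::optional<Detection> decode_row(const std::vector<float>& row,
                                    int img_w, int img_h, float threshold) {
    if (row.size() < 6) return std::nullopt;  // need at least one class score

    // Pick the class with the highest score.
    auto best = std::max_element(row.begin() + 5, row.end());
    float score = *best;
    if (score < threshold) return std::nullopt;

    // Scale the normalized center and size to pixels.
    float cx = row[0] * img_w;
    float cy = row[1] * img_h;
    float w = row[2] * img_w;
    float h = row[3] * img_h;

    // Top-left corner, clamped at 0 so the unsigned cast below is safe.
    long left = std::max(0L, std::lround(cx - w / 2.0f));
    long top = std::max(0L, std::lround(cy - h / 2.0f));

    Detection d{};
    d.class_id = static_cast<std::uint32_t>(best - (row.begin() + 5));
    d.confidence = score;
    d.x = static_cast<std::uint32_t>(left);
    d.y = static_cast<std::uint32_t>(top);
    d.width = static_cast<std::uint32_t>(std::lround(w));
    d.height = static_cast<std::uint32_t>(std::lround(h));
    return d;
}
```

In a full pipeline, overlapping boxes for the same object would normally be filtered with non-maximum suppression before publishing.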

Robot operating system

We have the following ROS nodes: brain, camera, sub, test, and lur. The lur package creates a namespace containing some typedefs, constants, and helper functions.

Thread safe queue

I wrote a thread-safe queue that we use in several places to pass data and message requests safely between threads.

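```cpp
#ifndef TS_QUEUE
#define TS_QUEUE

#include <condition_variable>
#include <mutex>
#include <queue>
#include <utility>

// A FIFO queue that can be shared between threads. Every access to the
// underlying std::queue is guarded by a mutex; dequeue(), front(), and back()
// block until an element is available.
template <typename T>
class TSQueue {
public:
    TSQueue() = default;

    // Add an element and wake waiting threads. notify_all (rather than
    // notify_one) ensures a waiter in dequeue() is not left asleep when the
    // single wakeup goes to a thread blocked in front() or back().
    void enqueue(T e) {
        std::lock_guard<std::mutex> lck(mtx);
        q.push(std::move(e));
        cv.notify_all();
    }

    // Block until the queue is non-empty, then remove and return the front element.
    T dequeue() {
        std::unique_lock<std::mutex> lck(mtx);
        cv.wait(lck, [this] { return !q.empty(); });
        T e = std::move(q.front());
        q.pop();
        return e;
    }

    // Discard the front element without blocking; returns -1 if the queue was empty.
    int pop() {
        std::lock_guard<std::mutex> lck(mtx);
        if (q.empty()) {
            return -1;
        }
        q.pop();
        return 0;
    }

    int size() {
        std::lock_guard<std::mutex> lck(mtx);
        return static_cast<int>(q.size());
    }

    // Block until non-empty, then return a copy of the front element.
    T front() {
        std::unique_lock<std::mutex> lck(mtx);
        cv.wait(lck, [this] { return !q.empty(); });
        return q.front();
    }

    // Block until non-empty, then return a copy of the back element.
    T back() {
        std::unique_lock<std::mutex> lck(mtx);
        cv.wait(lck, [this] { return !q.empty(); });
        return q.back();
    }

    bool empty() {
        std::lock_guard<std::mutex> lck(mtx);
        return q.empty();
    }

private:
    std::queue<T> q;
    mutable std::mutex mtx;
    std::condition_variable cv;
};

#endif
```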

Tools and tests

Part 2: New Drone

After the competition, my team leader, the electrical lead, and I decided to create a new drone with a fully custom design. Our goal was to build the system from the ground up, designing PCBAs with sensors and a microcontroller instead of relying on a flight controller unit (FCU). This required extensive component testing and careful software design.

Teensy: We decided to replace the Pixhawk flight controller with a custom solution. We use a Teensy 4.1 to control the thrusters, read sonar values, and gather data from an IMU. I wrote a library to manage all of this functionality.

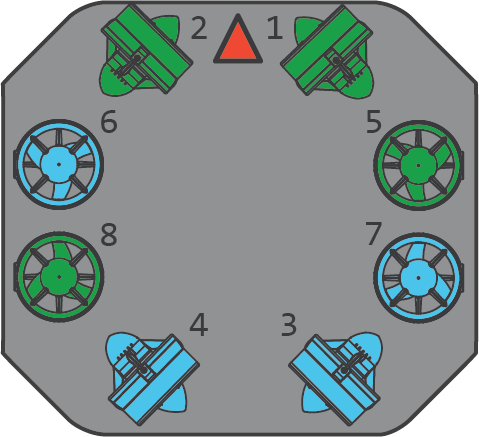

Thrusters: We used a configuration matrix to compute the correct power for each thruster, based on the thrusters' orientations and the dynamics of the vehicle underwater. It is designed around this orientation, with one row per degree of freedom and one column per thruster:

```
Config Matrix
------------------------------------
|       | 1  2  3  4  5  6  7  8  |
------------------------------------
| x     | .  .  .  .  .  .  .  .  |
| y     | .  .  .  .  .  .  .  .  |
| z     | .  .  .  .  .  .  .  .  |
| roll  | .  .  .  .  .  .  .  .  |
| pitch | .  .  .  .  .  .  .  .  |
| yaw   | .  .  .  .  .  .  .  .  |
------------------------------------
```

```cpp
// rows: x, y, z, roll, pitch, yaw; columns: thrusters 1-8
const float thruster_config[6][8] = {
    {  1.0,  1.0, -1.0, -1.0,  0.0,  0.0,  0.0,  0.0 },  // x
    {  1.0, -1.0,  1.0, -1.0,  0.0,  0.0,  0.0,  0.0 },  // y
    {  0.0,  0.0,  0.0,  0.0,  1.0,  1.0,  1.0,  1.0 },  // z
    {  0.0,  0.0,  0.0,  0.0, -1.0,  1.0, -1.0,  1.0 },  // roll
    {  0.0,  0.0,  0.0,  0.0,  1.0,  1.0, -1.0, -1.0 },  // pitch
    { -1.0,  1.0,  1.0, -1.0,  0.0,  0.0,  0.0,  0.0 }   // yaw
};
```

As an example, to calculate the power for thruster 5 moving in the z direction (ascend/descend) at power p, we multiply p by the value in the 3rd row, 5th column. That value is 1.0, so thruster 5 receives p.
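The same lookup extends to a full six-axis command. The minimal sketch below assumes each thruster's power is the sum of its contributions across all axes, power[j] = sum over i of command[i] * thruster_config[i][j]. It also assumes that if any result exceeds full power, all outputs are scaled down by the same factor. The function name mix, the Axis enum, and the scaling step are illustrative, not necessarily what our Teensy library does.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Degrees of freedom, in the same row order as thruster_config.
enum Axis { X = 0, Y, Z, ROLL, PITCH, YAW };

// Mix a 6-DOF command (each axis in [-1, 1]) into 8 thruster powers.
// Each thruster's power is the sum over axes of command[axis] * config[axis][thruster].
// If any result exceeds 1 in magnitude, every output is divided by the largest
// magnitude, which keeps the ratios between thrusters (and so the direction of
// motion) while respecting the power limit.
std::array<float, 8> mix(const float (&config)[6][8], const std::array<float, 6>& command) {
    std::array<float, 8> out{};
    for (std::size_t t = 0; t < 8; ++t) {
        for (std::size_t a = 0; a < 6; ++a) {
            out[t] += command[a] * config[a][t];
        }
    }

    float max_mag = 0.0f;
    for (float p : out) max_mag = std::fmax(max_mag, std::fabs(p));
    if (max_mag > 1.0f) {
        for (float& p : out) p /= max_mag;
    }
    return out;
}
```

For example, combining full forward (x = 1) with full yaw (yaw = 1) gives raw outputs of 0, 2, 0, and -2 on thrusters 1 to 4. After scaling these become 0, 1, 0, and -1, so the vehicle still turns while moving forward instead of saturating two thrusters.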

Sonar: At the competition, we won a sonar from Blue Robotics. It uses their open-source Ping protocol, and we use a C++ library to communicate with it.
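For reference, a Ping protocol message is a small binary frame. Based on our reading of Blue Robotics' Ping protocol specification, each frame contains, in order:

- the start bytes 'B' and 'R'
- a little-endian uint16 payload length
- a uint16 message ID
- one byte each for the source and destination device IDs
- the payload
- a uint16 checksum equal to the sum of all preceding bytes

The sketch below builds and checks such frames. It is not the C++ library we actually used, and the helper names are hypothetical. Its example request uses message ID 6 (a general request in the common message set) with a payload asking for message 1211. Check both IDs against the specification before relying on them.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Append a uint16 in little-endian byte order.
static void put_u16(std::vector<std::uint8_t>& buf, std::uint16_t v) {
    buf.push_back(static_cast<std::uint8_t>(v & 0xFF));
    buf.push_back(static_cast<std::uint8_t>(v >> 8));
}

// Build a Ping protocol frame: 8-byte header, payload, then 2-byte checksum.
std::vector<std::uint8_t> build_ping_message(std::uint16_t message_id,
                                             const std::vector<std::uint8_t>& payload,
                                             std::uint8_t src_id = 0,
                                             std::uint8_t dst_id = 0) {
    std::vector<std::uint8_t> msg = {'B', 'R'};
    put_u16(msg, static_cast<std::uint16_t>(payload.size()));
    put_u16(msg, message_id);
    msg.push_back(src_id);
    msg.push_back(dst_id);
    msg.insert(msg.end(), payload.begin(), payload.end());

    // Checksum: 16-bit sum of every byte before it (wraps on overflow).
    std::uint16_t checksum = 0;
    for (std::uint8_t b : msg) checksum = static_cast<std::uint16_t>(checksum + b);
    put_u16(msg, checksum);
    return msg;
}

// Verify that a received frame's trailing checksum matches its contents.
bool checksum_ok(const std::vector<std::uint8_t>& msg) {
    if (msg.size() < 10) return false;  // header (8) + checksum (2) minimum
    std::uint16_t sum = 0;
    for (std::size_t i = 0; i + 2 < msg.size(); ++i) {
        sum = static_cast<std::uint16_t>(sum + msg[i]);
    }
    std::uint16_t stored = static_cast<std::uint16_t>(
        msg[msg.size() - 2] | (msg[msg.size() - 1] << 8));
    return sum == stored;
}
```

Under those assumptions, build_ping_message(6, {0xBB, 0x04}) produces a 12-byte frame whose payload is 1211 in little-endian order.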

IMU:

Communication with main computer (Nvidia Jetson):